Unveiling OpenAI's Sora: The Revolutionary AI Shaping Video Creation Tomorrow.

Unveiling OpenAI’s Sora: The Revolutionary AI Shaping Video Creation Tomorrow.

Key Takeaways

- OpenAI Sora creates highly realistic video clips from text prompts, showcasing a major advancement in AI technology.

- Sora’s ability to simulate physics in videos accurately is a standout feature, but it still has some issues with interactions and object generation.

- The availability of Sora to the public is uncertain, as it is currently being tested for safety and quality before a firm release date is set.

The speed of AI development is heading towards a point beyond human comprehension, and OpenAI’s Sora text-to-video system is just the latest AI tech to shock the world into realizing things are happening sooner than anyone expected.

What Is OpenAI Sora?

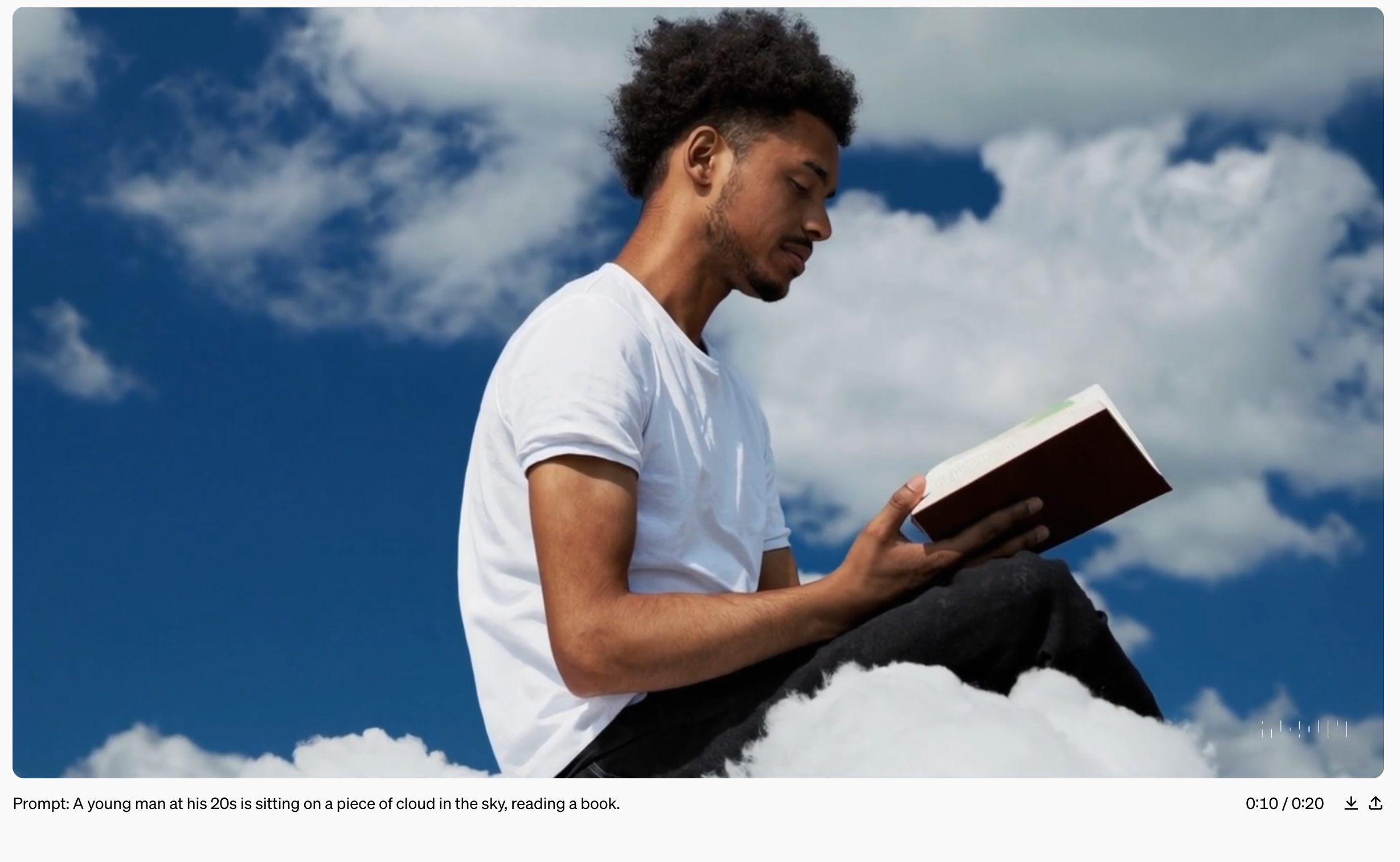

Like other generative AI tools such as DALL-E and MidJourney , Sora takes text prompts from you and converts them into a visual medium. However, unlike those aforementioned AI image generators, Sora creates a video clip complete with motion, different camera angles, direction, and everything else you’d expect from a traditionally-produced video.

Looking at the examples on the Sora website , the results are more often than not indistinguishable from real, professionally-produced video. Everything from high-end drone footage to multi-million dollar movie productions. Complete with AI-generated actors, special effects,the works.

Sora is of course not the first technology to do this. Until now, the most visible leader in this area was RunwayML , who do offer their services to the public for a fee. However, even under the best circumstances, Runway’s videos are more akin to the early generations of MidJourney still images . There’s no stability in the image, the physics doesn’t make sense, and as I write this, the longest clip length is 16 seconds.

In contrast, the best output that Sora has to show is perfectly stable, with physics that look right (to our brains at least), and clips can be up to a minute in length. The clips are completely devoid of sound, but there are already other AI systems that can generate music, sound effect, and speech. So I have no doubt those tools could be integrated into a Sora workflow, or at worst traditional voiceover and foley work.

It can’t be overstated what an enormous leap Sora represents from nightmarish AI video footage from just a year prior to the Sora demo. Such as the quite-disturbing AI Will Smith eating spaghetti . I think this is an even bigger shock to the system than when AI image generators went from a running joke to giving visual artists existential dread .

Sora is likely to impact the entire video industry from one-person stock footage makers all the way up to the level of Disney and Marvel mega-budget projects. Nothing will be untouched by this. I think this is especially true since Sora doesn’t have to create things whole-cloth, but can work on existing material, such as animating a still you’ve provided. This might be the true start of the synthetic movie industry .

How Does Sora Work?

We’re going to get a little under the hood of Sora as far as we can, but it’s not possible to go into that much detail. First, because OpenAI is ironically not open about the inner workings of their technology. It’s all proprietary and so the secret sauce that sets Sora apart from the competition is unknown to us in its precise details. Second, I’m not a computer scientist, you’re probably not a computer scientist, and so we can only understand how this technology works in broad general terms.

The good news is that there’s an excellent (paywalled) Sora explainer by Mike Young on Medium, based on a technical report from OpenAI that he’s broken down for us mere mortals to comprehend. While both documents are well worth reading, we can extract the most important facts here.

Sora is built on the lessons companies like OpenAI have learned when creating technologies like ChatGPT or DALL-E. Sora innovates how it’s trained on sample videos by breaking those videos up into “patches” which are analogous to the “tokens” used by ChatGPT’s training model. Because these tokens are all of equal size, things like clip length, aspect ratio, and resolution size don’t matter to Sora.

Sora uses the same broad transformer approach that powers GPT along with the diffusion method that AI image generators use. During training, it looks at noisy partly-diffused patch tokens from a video and tries to predict what the clean, noise-free token would look like. By comparing that to the ground truth, the model learns the “language” of video. Which is why the examples from the Sora website look so authentic.

Apart from this remarkable ability, Sora also has highly-detailed captions included for the video frames it’s trained on, which is a large part of why it’s able to modify the videos it generates based on text prompts.

Sora’s ability to accurately simulate physics in videos seems to be an emergent feature, which results simply from being trained on millions of videos that contain motion based on real-world physics. Sora has excellent object permanence, even when object leave the frame or are occluded by something else in-frame, they remain present and return unmolested.

However, it still has issues sometimes when things in the video interact, with causality, and with spontaneous object generation. Also, somewhat amusingly, Sora seems to confuse left with right from time to time. Nonetheless, what’s been shown so far is not only usable already, but absolutely state of the art.

When Will You Get Sora?

So we’re all extremely excited to get hands-on with Sora, and you can bet your bottom dollar I’ll be playing with it and writing up exactly how good this technology is when we’re not being shown hand-picked outputs, but how soon can this happen?

As of this writing, it’s unclear exactly how long it will be before Sora is available to the general public, or how much it will cost. OpenAI has stated that the technology is in the hands of the “red team”, which is the group of people who’s job is to try and make Sora do all the naughty things it’s not supposed to, and then help put guardrails up against that sort of thing happening when actual customers get to use it. This includes the potential to create misinformation, to make derogatory or offensive materials, and many more abuses one might imagine.

It’s also, as of this writing, in the hands of selected creators, which I suspect is both for testing purposes, and to get some third-party reviews and endorsements out as we lead up to its final release.

The bottom line is we don’t actually know when it will be available, in the same way you can just pay for and use DALL-E 3, and in reality even OpenAI doesn’t yet have a firm date. This is simply because if it’s in the hands of safety testers, they might uncover issues that take longer to fix than expected, which will push back a public release.

The fact that OpenAI feels ready to show off Sora and even take a few curated public prompts through X (formerly Twitter) simply means that the company thinks the quality of the final product is pretty much ready, but until there’s a better picture of public opinion, safety issues raised, and also safety issues discovered, no one can say for sure. I think we’re talking months rather than years, but don’t expect it next week.

Also read:

- [New] In 2024, Easy Ways to Screen Record on Dell Laptop

- Advanced VR Tech for Drone Enthusiasts

- Compatibility: Using AirPods on a Nintendo Console

- How To Fix Unresponsive Touch Screen on Motorola Moto G34 5G | Dr.fone

- In 2024, Unveiling the Premier 5 Windows Snipping Apps

- Speedy Conversion Techniques for Your SRT to TXT Tasks

- Step-by-Step Guide: Adding Lines Seamlessly Into Your Microsoft Word Documents

- Streamline Household Planning with These 7 Shared Family Calendar Apps

- The Essentials of Smartwatches – What Are They & Their Key Functions?

- Understanding the Transition to Electric Cars: What Does It Mean?

- Unveiling the Mechanisms That Govern YouTube After a Video Is Live for 2024

- Unveiling the Power of Slug Lines

- 무세약 M4R를 MP4로 자유성 전환기: Movavi의 쉬운 방법 - 인터넷 연결에서 사용하시오!

- Title: Unveiling OpenAI's Sora: The Revolutionary AI Shaping Video Creation Tomorrow.

- Author: Stephen

- Created at : 2024-12-07 12:28:07

- Updated at : 2024-12-11 08:02:14

- Link: https://tech-recovery.techidaily.com/unveiling-openais-sora-the-revolutionary-ai-shaping-video-creation-tomorrow/

- License: This work is licensed under CC BY-NC-SA 4.0.